How to use DuckDB to query Parquet file?

On of the good thing of DuckDB is that they are not inventing new data structure it works with the current data we have like csv, json and parquet.

Today we will learn how to query data from parquet file with DuckDB.

What is Parquet file?

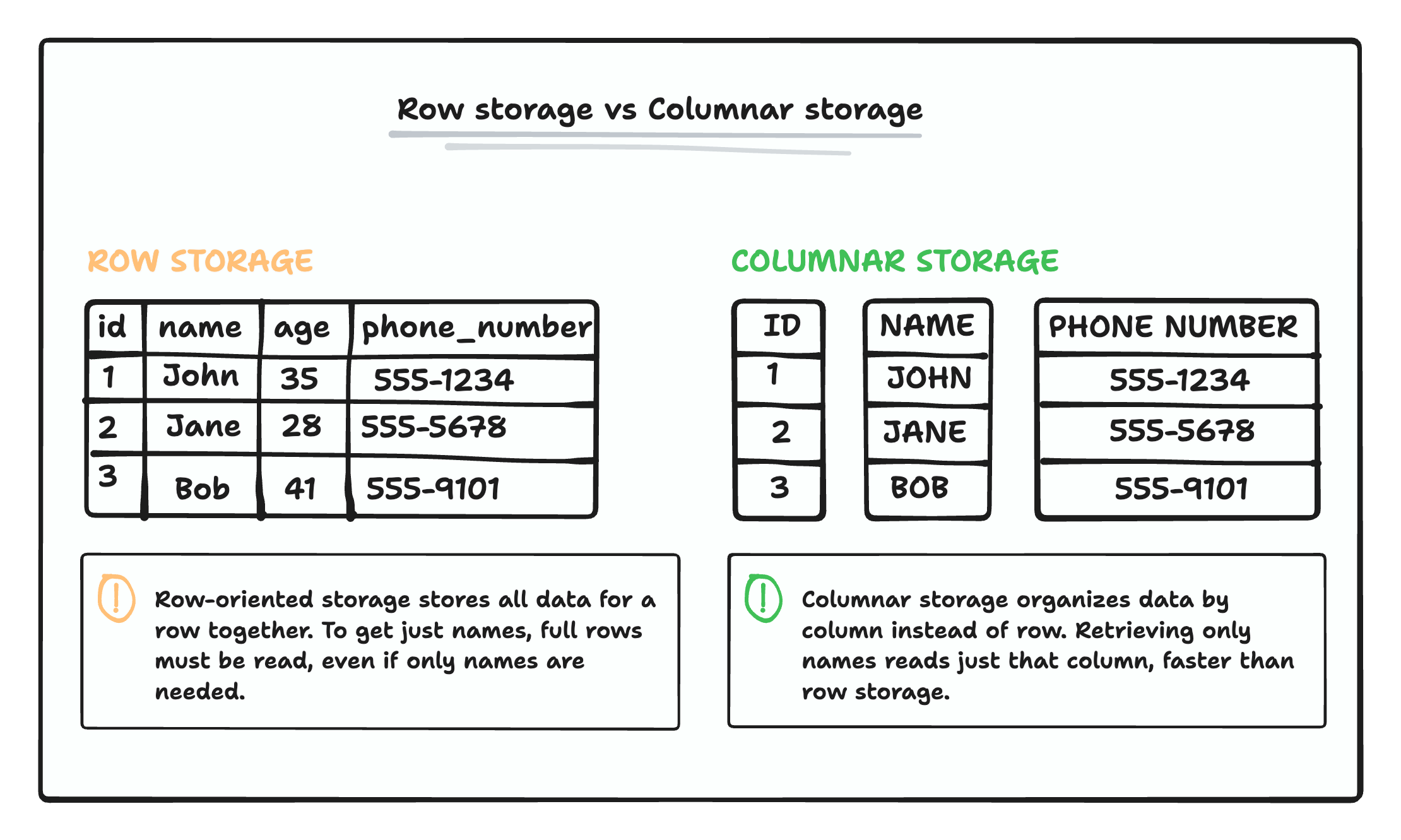

Parquet is a columnar storage file format that is optimized for use with big data processing frameworks. Columnar storage is mainly used for processing data for analytics.

Here's quick illustration how it different between columnar and row data storage.

Since DuckDB mainly focuses on analytics, it also supports working with parquet files out of the box.

Load parquet file into DuckDB

Import parquet and run query directly.

SELECT * FROM read_parquet('salary.parquet');

SELECT Club FROM read_parquet('salary.parquet') GROUP BY Club LIMIT 20;

Import parquet file into table.

CREATE TABLE salary AS SELECT * FROM read_parquet('salary.parquet');

Export table into Parquet file

You can also export your table into parquet file like this:

COPY (SELECT * FROM salary) TO 'salary.parquet' (FORMAT PARQUET);

Import parquet file from https

One of the cool features of DuckDB is that we can import data from the internet via HTTP file system extensions. To do that, first we need to install the HTTP file system extension.

INSTALL httpfs;

Then load the extensions:

LOAD httpfs;

Now we can import parquet file like this:

SELECT * FROM read_parquet('https://res.cloudinary.com/dr15yjl8w/raw/upload/v1700148540/salary_nrntcv.parquet');

You can also import parquet file from cloud storage like amazon s3 or google cloud storage, read more detail about it here.